So... there's this new trend of people falling in love with LLMs.

I think it's weird, and I think a lot of other people think it's weird too.

So maybe it would help to ask: Why does this happen? What is ChatGPT? If you were to fall in love with a large language model, is it the same as falling in love with a human?

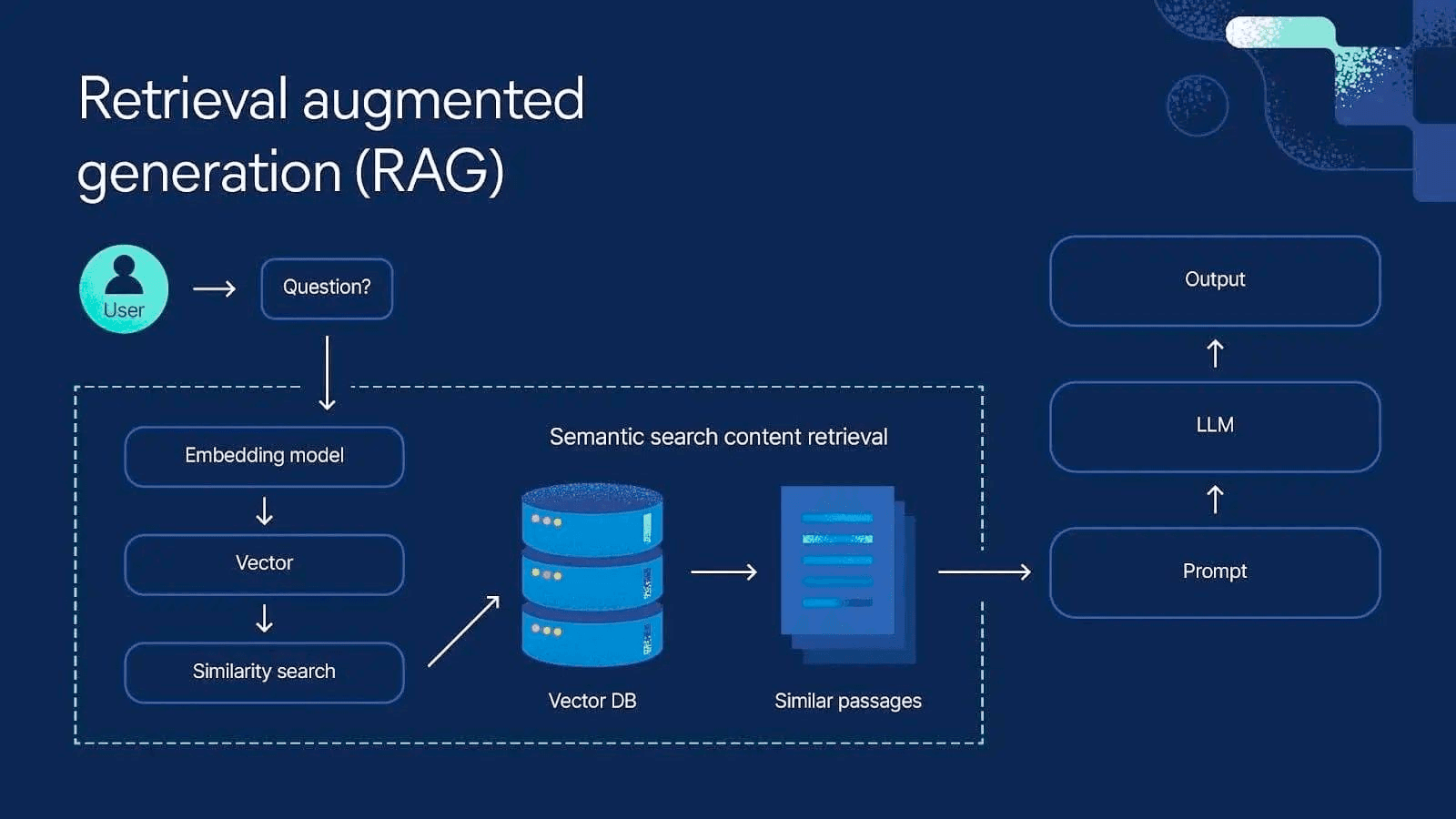

I think it would first help to compare LLMs to the human brain. Large language models, at their core, are just huge networks of intermediate calculations, to put it simply, whose only purpose is to predict the next word (strictly, the next token) from a given sequence of words. Things have progressed so rapidly that we've been able to get models to keep track of more and more overarching details and conversation context, allowing them to factor short-term past experiences into their outputs. In fact, we've even been able to get models to decide when they need to retrieve information from outside sources, like the web or long-term memory that has been preprocessed and stored in data centers (Retrieval-Augmented Generation).

Doesn't this sound familiar? Isn't this a lot like what the human brain does? In fact, the original design of neural networks (LLMs are a subgenre of a subgenre of NNs) was inspired by the operation of neurons in the human brain. Each artificial neuron is responsible for taking in possibly thousands of inputs and spitting out a single output signal. That's essentially what our biological neurons do when they fire an electrical pulse!
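If you're curious how simple one of these artificial neurons really is, here's a minimal sketch in Python. The function name `neuron`, the example weights, and the choice of a sigmoid to squash the result are all my own illustrative assumptions, not taken from any particular model. Real networks stack enormous numbers of these and learn the weights from data.

```python
import math

def neuron(inputs, weights, bias):
    """One artificial neuron: many inputs in, a single number out."""
    # Weighted sum of every input, plus a bias term.
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    # Sigmoid squashes the sum into a value between 0 and 1
    # (an illustrative choice; other activation functions are common).
    return 1.0 / (1.0 + math.exp(-total))

# Hypothetical inputs and weights, just for illustration.
inputs = [0.5, -1.0, 2.0]
weights = [0.8, 0.2, -0.5]
output = neuron(inputs, weights, bias=0.1)
print(output)
```

Three inputs go in, one number comes out. Scale the input list up to thousands of entries and you have the basic building block described above.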

So then, aren't we both just computers, LLMs of machine and metal and us of meat and muscle?

Don't we both rely on electrical pulses to create what we experience as consciousness? Does this mean LLMs and other model types could be conscious?

And most importantly: What does this mean for love? If a relationship with a human-like entity isn't actually love, then what is love? Why does one thing count as love while another doesn't? The difference is that, when falling in love with a robot, the lover gets to control the inception and continued development of their beloved. If you fall in love with an LLM, you fall in love with something you've invented rather than something you've discovered.

You probably told ChatGPT how you wanted it to react when you say certain things. You probably told ChatGPT what personality you wanted it to have. ChatGPT also learns passively about what you want just from talking to you. If you use LLMs a lot, you'll notice that, with enough persuasion, you can make them agree with you on virtually any topic. After all, they're trained to do so. That's how they come from the factory. During training, the model is rewarded for responses people rate highly, which often means telling you what you want to hear.

In the end, you are always ultimately falling in love with... a representation of yourself. So why isn't that love?

A little bit of a jump here: I believe that romantic love is not simply an infatuation with an object or a person.

It is the infatuation with learning about something entirely unknown to you, whether that's a thing or a person.

It is tracing the pads of your fingers down an object and feeling its texture, coarseness, smoothness, and imperfections. You just can't do that with something that was made in your own image. That wouldn't be love; it would be narcissism.

It is only through knowing others that you can truly find the connection so many people look for when they talk to ChatGPT. It is only in the imperfection of other people, and in the separation between you and them, that you will find what you're looking for.

So what is it that we're finding in LLMs? Most people are probably not narcissists who fall in love with themselves; they are likely just intoxicated by the chemical reactions they experience in their brains when they talk to their chatbot creations. Those reactions give the illusion of connection.

But, no matter how good the illusion gets, at the end of the day, you're still just talking to yourself.

This entry's track: